When it comes to faces, context matters

New study sheds light on underlying effects of visual settings on brain processing and facial perception

How we perceive faces depends on the context, but why is that?

Humans experience this phenomenon daily. We look at hundreds of faces and make a judgment about each one.

Until recently, we had little knowledge of how our brain computes signals to skew our interpretation of faces. However, a recent study involving UC Davis Health researchers has identified the brain mechanisms that play a role in how we connect outside contexts to facial recognition and perceptions. Previous research has shown that memories of our daily experiences influence the context of a place or event.

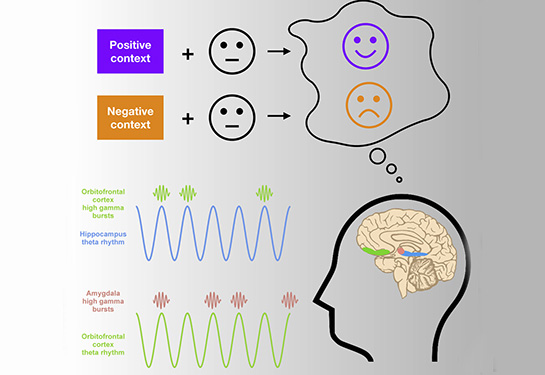

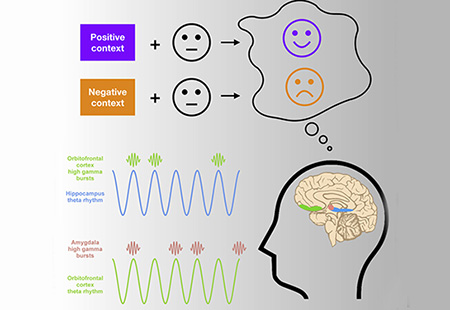

“We perceive a person as a possible arsonist if their face is seen alongside a burning building. But putting that same face on a beach or next to a dog makes it more likely that the face will be perceived as friendly and happy,” said Jack J. Lin, professor of neurology and director of the UC Davis Comprehensive Epilepsy Program.

The new study gathered data from epilepsy patients who volunteered to take part in the research. They had electrodes implanted inside their brains for seizure monitoring. These electrodes provided a rare window into the human brain.

“Our study reveals moment-to-moment network dynamics underlying the contextual modulation of facial interpretation in humans, a high-level cognitive process critical for everyday social interactions,” said Jie Zheng, research fellow at Harvard Medical School and the first author of the study. (Zheng completed the study while at UC Irvine.)

Prior research has revealed that context-specific variations involve three parts of the brain: the amygdala, the hippocampus and the orbitofrontal cortex, collectively referred to as the amygdala-hippocampal-orbitofrontal network. But how these different areas interact was not well understood – until now.


Understanding our bias

The researchers showed the participants a series of photos with emotional contexts, such as a dog running through a field or a set of hands opening a door through broken glass, each followed within a few seconds by a face. They asked the patients to rate whether the face appeared more positive or more negative. At the same time, the electrodes measured the interactions of the hippocampus and the amygdala, brain regions essential for emotion and memory, with the orbitofrontal cortex, a brain region critical for decision making and value judgment.

The researchers focused on two types of brain waves: theta and gamma waves. Theta waves are slower brain waves (about 8 cycles per second) and are important for communication between brain regions. Gamma waves are much faster (more than 75 cycles per second) and reflect the activity of a local population of neurons within a brain region.

Participants tended to rate a face more negatively when it was paired with a threatening photo, and the same face more positively when it was paired with a happy background.

“In essence, the brain learns to bind together the face with the background photo to bias our perception of the face,” Lin explained.

The researchers were able to measure how signals passed between these brain areas.

Using the electrodes implanted in the brain, the researchers could record two-way theta-gamma wave interactions in the brain’s emotion, memory and decision networks. By observing how theta-gamma coupling was coordinated between these brain regions, the researchers successfully predicted how the participants perceived the face they were observing, even before seeing the participants’ responses.

“In the future, hopefully, we can help people modulate behavior based on the brain waves,” Lin said. “This could be a healthy or a pathological thing, like with PTSD (post-traumatic stress disorder) for example, where someone has been conditioned to an acute response based on a previously experienced context.”

By showing the dynamics between different parts of the human brain, this landmark study reveals how various emotional events are coded to shape our perception and therefore guide social behavior. It also opens the possibility of modulating human responses by first understanding the underlying neural signals.

Co-authors: Ivan Skelin, University of California, Davis

This research is funded by the National Institutes of Health BRAIN (Brain Research Through Advancing Innovative Neurotechnologies) Initiative (U01 and U19).